Data is good, right? The more data the better. In fact, there is a whole segment of IT devoted to data, called big data analytics. Operations have data, tons of it. Every technology device spits out gigabytes of data a day. The question is how to filter it. It’s all about reducing that real-time stream of data into actionable information. Understanding service assurance correlation & analytics is all about focusing operations, and that focus can produce better business results. This blog details common concepts and what’s available in the marketplace. I want to show the value of turning data analytics into actionable information: value that operations can execute on successfully.

Maturity Curve for Service Assurance Correlation & Analytics

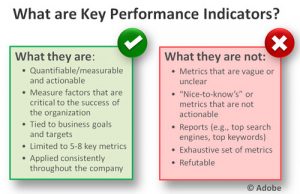

Let’s talk about terminology first. Correlation versus analytics is an interesting subject. Most people I talk to consider correlation to apply only within fault management, while analytics includes time-series data like performance metrics and logs. I know some would disagree with that simplification, but we can use it here to avoid confusion. Whichever term you use, what we look for is reduction and simplification. The more actionable your information is, the quicker you can resolve problems.

Visual Correlation & Analytics

The first step on the road to service assurance correlation and analytics is enabling a visual way to correlate data. Correlation is not possible if the data is missing, so unified collection is the prerequisite. Once the data is co-located, you can drive operations activities to resolution. Technicians can use the tool to find the cause of a fault, and drill-down tools can help them uncover enough information to perform manual parent/child correlation.

Users of the assurance tool can also suppress, or hide, faults. Faults that are non-impacting or known false alarms are sorted out as “noise”. Assurance systems can then leverage third-party data to enrich faults. Enrichment adds more actionable data to each fault, which makes it easier to troubleshoot. All these concepts should be second nature. Operations should have all these visual features as part of their assurance platform; otherwise, they are hamstrung.
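To make enrichment and noise suppression concrete, here is a minimal Python sketch. It assumes faults arrive as simple records and that the third-party data is a dictionary keyed by device name. `INVENTORY`, `KNOWN_NOISE`, `Fault`, and `process` are hypothetical names used for illustration, not any assurance product’s API.

```python
from dataclasses import dataclass, field

# Hypothetical inventory export standing in for third-party enrichment data
# (for example, a CMDB or asset database keyed by device name).
INVENTORY = {
    "rtr-dal-01": {"site": "Dallas", "role": "core", "owner": "Network Ops"},
    "lab-sw-07": {"site": "Lab", "role": "lab", "owner": "Engineering"},
}

# Hypothetical (device, alarm) pairs that operations has tagged as known false alarms.
KNOWN_NOISE = {("lab-sw-07", "fan_speed_warning")}


@dataclass
class Fault:
    device: str
    alarm: str
    severity: str
    enrichment: dict = field(default_factory=dict)
    suppressed: bool = False


def process(fault: Fault) -> Fault:
    """Attach inventory context to a fault, then hide it if it is known noise."""
    # Copy so later edits to the fault never mutate the shared inventory record.
    fault.enrichment = dict(INVENTORY.get(fault.device, {}))
    # Suppressed faults stay in the system but drop out of the default NOC view.
    fault.suppressed = (fault.device, fault.alarm) in KNOWN_NOISE
    return fault
```

A `link_down` on `rtr-dal-01` comes back tagged with its site, role, and owner, while the lab fan warning is marked as suppressed noise.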

Basic Correlation & Analytics

Once you have a tool that collects all your data, you will be swimming in it. You must reduce that data stream; if not, you will overload the NOC, leaving it looking at the haystack instead of the needle. Many basic strategies allow that reduction.

First, look at de-duplication. This feature matches up repeat faults and data points, which can eliminate up to 80% of the raw data. Matching “up” messages to “down” messages can eliminate about 50% of your data stream. Reaping jobs can close out data that is no longer deemed “current” and limit how much log data is kept. Another common feature is fault suppression, for example by time window during scheduled maintenance or outside business hours. Threshold policies can listen to “noise” data and create an alert only after it occurs X times in Y minutes. These features should be available on any assurance platform. If yours lacks them, look to augment it.
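Here is a minimal sketch of de-duplication and up/down matching. It assumes each event is a `(device, alarm_name)` pair and that a hypothetical `CLEARS` table pairs each “up” message with the “down” alarm it resolves. Real platforms typically key on more fields (interface, severity, source), so treat this as an illustration of the idea rather than a drop-in policy.

```python
from dataclasses import dataclass


@dataclass
class Alarm:
    device: str
    alarm: str
    count: int = 1  # how many raw events this single row represents


# Hypothetical pairing of "resolution" messages to the "problem" alarms they clear.
CLEARS = {"link_up": "link_down", "bgp_established": "bgp_down"}


def reduce_stream(events):
    """De-duplicate repeat alarms and let 'up' messages clear matching 'down' ones.

    events: iterable of (device, alarm_name) tuples in arrival order.
    Returns the still-open alarms keyed by (device, alarm_name).
    """
    active = {}
    for device, name in events:
        if name in CLEARS:
            # An "up" message closes the matching "down" alarm instead of being stored.
            active.pop((device, CLEARS[name]), None)
            continue
        key = (device, name)
        if key in active:
            active[key].count += 1  # duplicate: bump the tally, no new row
        else:
            active[key] = Alarm(device, name)
    return active
```

Given three `link_down` repeats from `rtr1`, one `bgp_down` from `rtr2`, and a final `link_up` from `rtr1`, the five raw events collapse to a single open alarm: `rtr2`’s `bgp_down`.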

Root Cause Analysis Correlation & Analytics

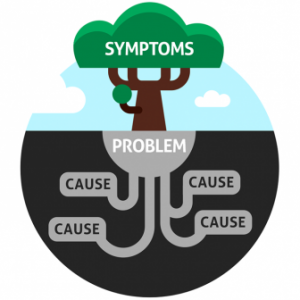

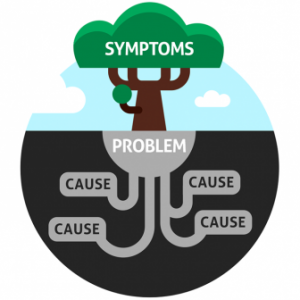

If you have a NOC with thousands of devices or tens of domains, you need cross-domain correlation. Root cause analysis (RCA) is key to reducing the complexity of large access networks, and performing RCA across many technology domains is a core strategy for consolidated network operations. Instead of playing the blame game, you know which layer is at fault. Leveraging topology to sift through faults is a well-known technique; unfortunately, it is not yet typical in operations. Topology data can be difficult to collect or of poor quality, so operations needs a strong discovery strategy to prevent this.
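Topology-based RCA can be sketched in a few lines. This assumes the discovered topology is available as a hypothetical `UPSTREAM` map from each device to the single device it depends on. A fault whose upstream path contains another faulted device is treated as a symptom of the highest faulted ancestor. Production RCA engines also handle redundant paths and multiple parents, which this tree-shaped sketch does not.

```python
# Hypothetical discovered topology: each device maps to the upstream device it depends on.
UPSTREAM = {
    "cpe-101": "olt-5",
    "cpe-102": "olt-5",
    "olt-5": "agg-2",
    "agg-2": "core-1",
    "core-1": None,
}


def root_causes(faulted, upstream):
    """Split faulted devices into root causes and symptoms using topology.

    Returns (roots, symptoms) where symptoms maps each symptom device to the
    faulted ancestor blamed for it.
    """
    faulted = set(faulted)
    roots, symptoms = set(), {}
    for device in faulted:
        cause = None
        seen = set()
        parent = upstream.get(device)
        # Walk up the dependency chain; the seen set guards against loops in bad data.
        while parent is not None and parent not in seen:
            seen.add(parent)
            if parent in faulted:
                cause = parent  # keep climbing: the highest faulted ancestor wins
            parent = upstream.get(parent)
        if cause is None:
            roots.add(device)
        else:
            symptoms[device] = cause
    return roots, symptoms
```

With `olt-5` down, `root_causes({"cpe-101", "cpe-102", "olt-5"}, UPSTREAM)` reports `olt-5` as the single root cause and the two CPEs as its symptoms, so the NOC works one problem instead of three.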

Cluster-based Correlation

Cluster-based correlation is another RCA strategy, and this one does not rely upon topology data. The concept here is to use trained patterns or machine learning. A written profile groups data when a certain pattern is matched. Some tools create patterns during the troubleshooting process; others use algorithms that align faults by time and alert type. Once the pattern matches, the alert fires and the symptoms are rolled up beneath it, reducing the event stream. This correlation method is popular, but it hasn’t delivered many results yet. Algorithms are the key here, and many challenge its ROI because the model requires machine training.
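The following sketch shows only the simplest form of the idea. It substitutes plain time-window grouping and a hand-written pattern check for machine learning. `cluster_by_time`, `match_pattern`, `roll_up`, and the `PATTERN_FIBER_CUT` profile are all hypothetical; a trained system would learn which alarms belong together instead of relying on a fixed list.

```python
# Hypothetical "written profile": alarm types that tend to appear together.
PATTERN_FIBER_CUT = {"los", "link_down", "bgp_down"}


def cluster_by_time(faults, max_gap_s=60):
    """Group faults so each one arrives within max_gap_s of the previous one.

    faults: list of (timestamp_s, device, alarm) tuples.
    """
    clusters = []
    for fault in sorted(faults):
        if clusters and fault[0] - clusters[-1][-1][0] <= max_gap_s:
            clusters[-1].append(fault)
        else:
            clusters.append([fault])
    return clusters


def match_pattern(cluster, pattern):
    """True if every alarm type in the profile appears in the cluster."""
    return pattern <= {alarm for _, _, alarm in cluster}


def roll_up(cluster):
    """Summarize a cluster as one alert: the first fault plus a symptom count."""
    start, device, alarm = cluster[0]
    return {"start": start, "first_device": device, "first_alarm": alarm,
            "symptoms": len(cluster) - 1}
```

For faults at 0 s (`los`), 5 s (`link_down`), 12 s (`bgp_down`), and an unrelated one at 400 s, the first three form one cluster that matches the profile and rolls up into a single alert with two symptoms.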

Customer Experience Assurance

Next, RCA enables operations to become more customer-centric. Service-oriented correlation allows operations to see the quality of their network through their customers’ eyes. Some call this functionality “service impact analysis”; I like the term “customer experience assurance”. Understanding which faults are impacting customers and their services enables more efficient operations. The holy grail of operations is focusing only on root causes, then prioritizing action by customer value.
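At its core, service impact analysis maps root-cause faults onto the services that ride on the affected resources. Here is a minimal sketch, assuming a hypothetical `SERVICES` model that lists each service’s customer, a business value weight, and the devices it depends on.

```python
# Hypothetical service model: customer, business value weight, and dependent devices.
SERVICES = {
    "svc-acme-mpls": {"customer": "Acme", "value": 10, "devices": {"core-1", "agg-2"}},
    "svc-bob-dsl": {"customer": "Bob", "value": 1, "devices": {"olt-5", "cpe-102"}},
}


def impacted_services(root_cause_devices, services):
    """Return (service, customer, value) for impacted services, highest value first."""
    down = set(root_cause_devices)
    hits = [
        (name, svc["customer"], svc["value"])
        for name, svc in services.items()
        if svc["devices"] & down  # any shared device means the service is impacted
    ]
    return sorted(hits, key=lambda hit: hit[2], reverse=True)
```

With `agg-2` and `olt-5` both down, the Acme MPLS service sorts to the top of the work queue ahead of the lower-value DSL service.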

Service Quality Management

Lastly, you can track customer temperature by moving beyond outages and into quality. It’s important to understand the KPIs of the service, because they show how well the service is performing. Grouping them together simplifies the picture. While operations may ignore bumps and blips, you still need to track them, because those blips are cumulative in the customer’s eyes. Once a quality threshold is violated, the customer’s patience will be limited. Operations needs to know the temperature of the customer. Service- and customer-level insights are important to providing high-quality service, and a feature like this drives better customer outcomes.
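One way to track cumulative blips is a rolling count of minor KPI breaches for each customer service. This sketch assumes latency is the only KPI. `CustomerTemperature` and its parameters (`latency_ms_limit`, `window`, `blip_budget`) are hypothetical, and real service quality management would combine several KPIs.

```python
from collections import deque


class CustomerTemperature:
    """Track cumulative minor KPI degradations for one customer service.

    Hypothetical parameters: latency_ms_limit marks a "blip", window is how many
    recent samples to remember, and blip_budget is how many blips the customer
    tolerates in that window before operations should act.
    """

    def __init__(self, latency_ms_limit=50.0, window=100, blip_budget=5):
        self.latency_ms_limit = latency_ms_limit
        self.blip_budget = blip_budget
        self.samples = deque(maxlen=window)  # True for a blip; old samples fall off

    def record(self, latency_ms):
        self.samples.append(latency_ms > self.latency_ms_limit)

    @property
    def blips(self):
        return sum(self.samples)

    def threshold_violated(self):
        return self.blips > self.blip_budget
```

No single sample here is an outage, but six blips out of eight samples exceed the budget of five, which is exactly the slow burn the customer notices.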

Cognitive Correlation & Analytics

The nirvana of correlation and analytics includes a cognitive approach. It’s a simple concept: the platform listens, learns, and applies filtering and alerting. In practice, it is very hard. The available algorithms are diverse: they are either domain-specific (website log tracking) or generic in nature (Holt-Winters). Solutions need to be engineered to apply the algorithms only where they make sense.

Holt-Winters Use Case

One key use case is IP SLA WAN link monitoring. Latency across links must be consistent, so if you see a jump, that anomaly may matter. The Holt-Winters algorithm tracks abnormal behavior through seasonal exponential smoothing: it forecasts each new value from the series’ level, trend, and seasonal pattern, and a value that lands far from the forecast is abnormal. Applied to this use case, an alert is raised when the latency breaks from its normal behavior. This allows operations to avoid setting arbitrary threshold levels, and applying smart threshold alerting can reduce operational workload. Holt-Winters shows how cognitive analytics can drive better business results.
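Here is a minimal, pure-Python sketch of additive Holt-Winters smoothing with a seasonally smoothed deviation band. It assumes evenly spaced latency samples and a known season length (for example, 288 five-minute samples per day). The smoothing constants and the band width `k` are illustrative defaults, not tuned values, and `holt_winters_anomalies` is a hypothetical name.

```python
def holt_winters_anomalies(series, season_len, alpha=0.5, beta=0.05, gamma=0.3,
                           k=3.0, min_dev=1.0):
    """Flag points outside a Holt-Winters forecast band.

    Additive model: level + trend + seasonal offset. The first two seasons
    initialize the model. Returns (index, actual, forecast) for each anomaly.
    """
    m = season_len
    if len(series) < 2 * m:
        raise ValueError("need at least two full seasons of history")
    first, second = series[:m], series[m:2 * m]
    level = sum(first) / m
    trend = (sum(second) - sum(first)) / (m * m)  # average per-step change
    season = [y - level for y in first]           # seasonal offsets from the mean
    dev = [min_dev] * m                           # smoothed absolute error per slot
    anomalies = []
    for t in range(m, len(series)):
        y, i = series[t], t % m
        forecast = level + trend + season[i]
        error = abs(y - forecast)
        # The "smart threshold": k times the usual error for this seasonal slot.
        if error > k * max(dev[i], min_dev):
            anomalies.append((t, y, round(forecast, 2)))
        prev_level = level
        level = alpha * (y - season[i]) + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
        season[i] = gamma * (y - level) + (1 - gamma) * season[i]
        dev[i] = gamma * error + (1 - gamma) * dev[i]
    return anomalies
```

Because every point, including an anomalous one, updates the model, a single spike can also pull the next few forecasts off and trigger follow-on alerts. In production, a library implementation such as statsmodels’ `ExponentialSmoothing` is usually a better starting point. The sketch only shows where the “smart threshold” comes from: the band tracks the link’s own history instead of sitting at a fixed number.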

Adaptive Filtering Use Case

Under basic correlation, I listed threshold policies, a form of dynamic filtering: if a fault happens “X times in Y minutes”, create alert Z. This generic policy is helpful, but the more you use it, the more you will realize that you need something smarter. Adaptive filtering using cognitive algorithms allows for a more comprehensive solution. While the X-Y-Z example depends upon two variables, an adaptive algorithm can leverage hundreds. Is the device in a lab, or is it a core router? Does the fault occur every day at the same time? Does it precede a hard failure?
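The generic X-Y-Z policy is essentially a sliding-window counter. Here is a minimal sketch, assuming timestamps in seconds that arrive in order for each fault key. `CountThresholdPolicy` and `alert_z` are hypothetical names.

```python
from collections import defaultdict, deque


class CountThresholdPolicy:
    """Raise an alert when the same fault occurs x times within y_seconds."""

    def __init__(self, x, y_seconds, alert_name="alert_z"):
        self.x = x
        self.y_seconds = y_seconds
        self.alert_name = alert_name           # stands in for "alert Z"
        self.history = defaultdict(deque)      # fault key -> recent timestamps

    def observe(self, key, timestamp):
        """Record one occurrence; return an alert dict if the threshold is met."""
        times = self.history[key]
        times.append(timestamp)
        # Drop occurrences that have aged out of the Y-second window.
        while times and timestamp - times[0] > self.y_seconds:
            times.popleft()
        if len(times) >= self.x:
            times.clear()  # reset so one burst raises one alert, not many
            return {"alert": self.alert_name, "key": key, "at": timestamp}
        return None
```

With `x=3` and `y_seconds=300`, CRC errors at 0, 100, and 250 seconds raise one alert, while the same three errors spread over 0, 400, and 800 seconds raise none. Those two numbers are all the policy knows, which is the limitation adaptive filtering addresses.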

You can leverage all these variables to create an adaptive score. This score acts as an operational temperature gauge, or noise level. NOC techs can raise the score cut-off to cut noise during outages, lower it during quiet times, or sort by it to understand “what’s hot”. Adaptive filtering gives operations the ability to slice and dice their real-time fault feeds. This feature is a true force multiplier.
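Here is a minimal sketch of such a score, using just four of the variables mentioned above. The `FaultContext` fields, the `ROLE_WEIGHT` table, and every weight are hypothetical; a cognitive platform would learn these from history rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class FaultContext:
    device_role: str            # e.g. "core", "edge", "lab"
    recurs_daily: bool          # fires at the same time every day
    hard_failure_rate: float    # share of past occurrences followed by a hard failure (0..1)
    occurrences_last_hour: int


# Hypothetical weights: core devices matter most, lab devices least.
ROLE_WEIGHT = {"core": 40, "edge": 20, "lab": 0}


def adaptive_score(ctx: FaultContext) -> float:
    """Combine context into a 0-100 "temperature"; higher means more actionable."""
    score = ROLE_WEIGHT.get(ctx.device_role, 10)
    score += 40 * ctx.hard_failure_rate
    score += min(ctx.occurrences_last_hour, 10) * 2  # cap so chatty faults can't dominate
    if ctx.recurs_daily:
        score -= 25  # predictable daily events look like scheduled noise
    return max(0.0, min(100.0, float(score)))


def visible(faults, cutoff):
    """Return (score, name) pairs at or above the cutoff, hottest first.

    Raise the cutoff during outages to cut noise; lower it in quiet periods.
    """
    scored = [(adaptive_score(ctx), name) for name, ctx in faults.items()]
    return sorted([s for s in scored if s[0] >= cutoff], reverse=True)
```

The single `cutoff` argument is the knob NOC techs turn. The score itself can draw on as many variables as the platform can supply.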

Understand the Value in Service Assurance Correlation & Analytics

The important part of correlation & analytics within service assurance is its value. You must understand what is freely available and its value to operations. This varies greatly from customer to customer and environment to environment, so you have to decide how far down the rabbit hole you want to go. Always ask the question “How does that help us?” If you are not moving the needle, put it on the back burner.

If a policy is not saving 4-8 hours of effort a week, it’s just not worth the development effort. Find your quick wins first. Keep a list in a system like Jira and track your backlog. If you want to get serious, you may want to adopt an agile methodology like DevOps. Correlation and analytics are force multipliers: they allow operations to be smarter and act more efficiently. These are worthwhile pursuits, but make sure to practice restraint. Focus on the achievable; you don’t need to reinvent the wheel. Tools are out there that provide all these features. The question to focus on is “Is it worth my time?”
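The “is it worth my time?” test comes down to simple arithmetic. This sketch uses hypothetical `PolicyIdea` estimates. It keeps only ideas that clear the low end of the 4-8 hour weekly-savings bar, then sorts them by payback time so the quick wins surface first.

```python
from dataclasses import dataclass


@dataclass
class PolicyIdea:
    name: str
    dev_hours: float             # estimated effort to build and test the policy
    hours_saved_per_week: float  # estimated operator time saved once it is live

    @property
    def payback_weeks(self) -> float:
        """Weeks of savings needed to repay the development effort."""
        if self.hours_saved_per_week <= 0:
            return float("inf")
        return self.dev_hours / self.hours_saved_per_week


def quick_wins(ideas, min_weekly_savings=4.0):
    """Keep ideas that clear the weekly-savings bar, fastest payback first."""
    keep = [i for i in ideas if i.hours_saved_per_week >= min_weekly_savings]
    return sorted(keep, key=lambda i: i.payback_weeks)
```

A maintenance-window suppression rule that takes 8 hours to build and saves 6 a week pays for itself in under two weeks, so it outranks a 200-hour clustering project. A lab noise filter saving only an hour a week drops off the list entirely.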

About the Author

Serial entrepreneur and operations subject matter expert who likes to help customers and partners achieve solutions that solve critical problems. Experience in traditional telecom, ITIL enterprise, global managed service providers, and datacenter hosting providers. Expertise in optical DWDM, MPLS networks, MEF Ethernet, COTS applications, custom applications, SDDC virtualization, and SDN/NFV virtualized infrastructure. Based in the Dallas, Texas, US area and currently working for one of his founded companies, Monolith Software.