Thanks again for all the great feedback on this blog series. I want to continue the discussion by speculating about service assurance’s future with machine learning. There is no shortage of operational problems out there for providers and IT. Machine learning offers an inexpensive yet expansive and flexible way to solve them. Here are some of the more extreme ideas I have come up with for common problems in the industry. If you have heard of anyone tackling these with machine learning, I would love to hear more about it.

“We Hate Rules” — Says Everyone

One common complaint I have heard from customers and partners, for as long as I have been in business, is about rules. “We hate rules!” I don’t like rules either, but the problem this technology solves is a big one: how do I decode vital fault details, which arrive in a variety of formats, into operationally actionable events? Right now people use compilers to take SNMP MIBs and export them into rules of some sort. From HPOV to IBM Netcool to open-source MRTG, it’s the same solution.

What if we applied machine learning instead? What if automation enriched faults and decided which KPIs are important? Google is a great source of truth. Consider deconstructing the MIB into OIDs and googling each one. By parsing the search results, you could judge whether an OID is worth collecting or not. Then let’s use some of the solutions we have already discussed, fault storm management and fault reduction, to bubble up anomalies and chronics with zero human touch. How accurate could it be? The answer may be surprising. You could always add an organic gamification engine to curate the output. Think about the possible results: no rules, no human touch, no integration costs, only ramp time. An interesting idea.
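As a rough sketch of the search-and-score step, here is one way it could look. Everything in it is an assumption made for illustration: the keyword list, the threshold, and the hand-written snippets that stand in for parsed search results (no real search API is called). Only the two OIDs are real, the standard `linkDown` trap and `sysName`.

```python
import re

# Hypothetical vocabulary: words suggesting an OID matters operationally.
OPERATIONAL_TERMS = {"down", "failure", "alarm", "critical", "outage", "threshold", "error"}


def relevance_score(snippets):
    """Fraction of snippet words that are operational terms (0.0 if no text)."""
    words = [w for s in snippets for w in re.findall(r"[a-z]+", s.lower())]
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in OPERATIONAL_TERMS)
    return hits / len(words)


def worth_collecting(snippets, threshold=0.05):
    """Decide whether to collect an OID; the threshold is an arbitrary assumption."""
    return relevance_score(snippets) >= threshold


# Hand-written stand-ins for parsed search results, keyed by OID.
search_results = {
    "1.3.6.1.6.3.1.1.5.3": [  # linkDown trap
        "linkDown trap signals interface failure",
        "link down alarm troubleshooting",
    ],
    "1.3.6.1.2.1.1.5": [  # sysName
        "sysName is the administratively assigned name of the node",
    ],
}

decisions = {oid: worth_collecting(text) for oid, text in search_results.items()}
```

A real version would need a far better text model than keyword counting, but the shape is the same: text in, a collect-or-ignore decision out, and no hand-written rule per OID.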

Are We Really Impacting the Customer?

I know we have all heard this one before: service impact. How do you know whether a fault is service impacting or not? If you notify customers that they are down and they are not, they lower their opinion of you. Flip it around, where they are down and you did not tell them, and they hate you. Understanding impact is a common problem. Common industry practice is to leverage an event type category; think trap OID name. The problem is that this oversimplifies things, and there is a lot of guesswork in those rules (see above). What if the fault is in a lab environment? Is there no traffic on that interface? What if its redundancy is active or failed? Too much complexity.

This is machine learning’s sweet spot. Imagine a backfill from ticketing that shows where the customer confirmed there was an impact. Then link that data pool to the model of faults, so you can compare that model to a current situation and score the likelihood of impact. That way you are using a solid source of truth, the customer, to define the model.

UPDATE: It’s true you could use network probes to scan the data and ensure the service is being used. That is a pretty expensive solution IMHO, buying two probes for every network service. It would be cheaper to use Cisco IPSLA/Juniper RPM or the Rping MIB.
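To make the ticket-backfill idea concrete, here is a minimal sketch that scores the likelihood of impact with a small naive Bayes model. This is one possible technique, not a recommendation of a specific algorithm. The attribute names (`env`, `traffic`, `redundancy`) and the six historical faults are invented for illustration; in practice the labels would come from customer-confirmed tickets.

```python
from collections import defaultdict


class ImpactModel:
    """Naive Bayes over categorical fault attributes, with add-one smoothing."""

    def __init__(self):
        self.class_counts = defaultdict(int)    # label -> number of faults
        self.feature_counts = defaultdict(int)  # (label, name, value) -> count
        self.feature_values = defaultdict(set)  # name -> values seen in training

    def train(self, faults):
        """faults: iterable of (attributes dict, customer_confirmed_impact bool)."""
        for attrs, impacted in faults:
            self.class_counts[impacted] += 1
            for name, value in attrs.items():
                self.feature_counts[(impacted, name, value)] += 1
                self.feature_values[name].add(value)

    def impact_likelihood(self, attrs):
        """Estimated probability (0..1) that a fault with these attributes is impacting."""
        total = sum(self.class_counts.values())
        scores = {}
        for label in (True, False):
            p = (self.class_counts[label] + 1) / (total + 2)  # smoothed prior
            for name, value in attrs.items():
                n_values = len(self.feature_values[name] | {value})
                seen = self.feature_counts[(label, name, value)]
                p *= (seen + 1) / (self.class_counts[label] + n_values)
            scores[label] = p
        return scores[True] / (scores[True] + scores[False])


# Hypothetical history: fault attributes plus whether a ticket confirmed impact.
history = [
    ({"env": "prod", "traffic": "yes", "redundancy": "failed"}, True),
    ({"env": "prod", "traffic": "yes", "redundancy": "failed"}, True),
    ({"env": "prod", "traffic": "yes", "redundancy": "active"}, False),
    ({"env": "lab", "traffic": "no", "redundancy": "active"}, False),
    ({"env": "lab", "traffic": "no", "redundancy": "failed"}, False),
    ({"env": "prod", "traffic": "no", "redundancy": "active"}, False),
]

model = ImpactModel()
model.train(history)

risky = model.impact_likelihood({"env": "prod", "traffic": "yes", "redundancy": "failed"})
benign = model.impact_likelihood({"env": "lab", "traffic": "no", "redundancy": "active"})
```

With this toy history, the production fault with traffic and failed redundancy scores well above 0.5, and the idle lab fault scores well below it. The point is that the customer's own confirmations, not a hand-written rule, set those numbers.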

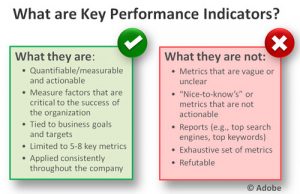

Which KPI is Important?

The last idea I have seen is around service quality management. In service management, customers complain that templates and models need to be pre-defined. Typical SLAs do not have the detail required to support a technology model that tracks them. The research required to determine which performance metrics drive them takes too much time and effort. With machine learning and public algorithms like “Granger causality”, a new possibility emerges: the service manager can identify and maintain the model, whatever the product offered. How could it work? My thought is simple: use root-level metrics (availability, latency, bandwidth) to provide a baseline. All other vendor OIDs or custom KPIs available can be collected and stored. With machine learning, you can develop models for each root metric and each custom metric. Using artificial intelligence, you can identify which custom metrics predict the degradation of a root one. Those are the metrics you want to poll more frequently, give higher priority, and use to power service quality metrics. The result would be less high-frequency polling and more meaningful prediction for service quality management.
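Here is a minimal sketch of that ranking step, using a Granger-style F statistic. It asks whether past values of a custom metric improve the prediction of a root metric beyond what the root metric's own history already gives. It is a simplified, dependency-free illustration, not a full statistical test, since it computes no p-value. The metric names (`queue_depth`, `fan_speed`, `latency`) and the synthetic series are assumptions made up for the example.

```python
import random


def residual_sum_of_squares(rows, targets):
    """Ordinary least squares via normal equations; assumes a non-singular design."""
    k = len(rows[0])
    # Augmented normal equations [X^T X | X^T y].
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * t for r, t in zip(rows, targets))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(k):
            if r != col:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    beta = [a[i][k] / a[i][i] for i in range(k)]
    return sum(
        (t - sum(b * x for b, x in zip(beta, r))) ** 2
        for r, t in zip(rows, targets)
    )


def granger_f(root, candidate, lag=1):
    """F statistic: do `lag` past values of candidate help predict root?"""
    times = range(lag, len(root))
    targets = [root[t] for t in times]
    # Restricted model: intercept plus the root metric's own past values.
    restricted = [[1.0] + [root[t - i] for i in range(1, lag + 1)] for t in times]
    # Unrestricted model: the same, plus the candidate's past values.
    unrestricted = [
        row + [candidate[t - i] for i in range(1, lag + 1)]
        for row, t in zip(restricted, times)
    ]
    rss_r = residual_sum_of_squares(restricted, targets)
    rss_u = residual_sum_of_squares(unrestricted, targets)
    dof = len(targets) - (2 * lag + 1)
    return ((rss_r - rss_u) / lag) / (rss_u / dof)


# Synthetic data: latency follows the previous sample of queue_depth plus noise,
# while fan_speed is unrelated.
rng = random.Random(7)
n = 200
queue_depth = [rng.gauss(0, 1) for _ in range(n)]
fan_speed = [rng.gauss(0, 1) for _ in range(n)]
latency = [5.0] + [
    5.0 + 0.8 * queue_depth[t - 1] + rng.gauss(0, 0.3) for t in range(1, n)
]

candidates = {"queue_depth": queue_depth, "fan_speed": fan_speed}
scores = {name: granger_f(latency, series) for name, series in candidates.items()}
ranked = sorted(scores, key=scores.get, reverse=True)  # best predictors first
```

Metrics at the top of `ranked` would be the ones to poll more often. A production version would more likely lean on an existing statistics library and test several lags, but the comparison of a restricted and an unrestricted model is the core of the idea.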

Let me know your thoughts. These are some of the crazier ideas I have seen/heard, but I am sure you have heard of others.

Article Map

- My Journey into Machine Learning with AI

- The Technology of Machine Learning with AI

- Challenges Addressed by Machine Learning with AI

- An Umbrella for Fault Storm Management

- Minimizing Faults with Machine Learning and AI

- Learning Your Operational Performance

- Automated Detection & Mitigation of Chronic Issues

- Service Assurance’s Future with Machine Learning

- End of the Beginning for Machine Learning

Serial entrepreneur and operations subject matter expert who likes to help customers and partners achieve solutions that solve critical problems. Experience in traditional telecom, ITIL enterprise, global managed service providers, and datacenter hosting providers. Expertise in optical DWDM, MPLS networks, MEF Ethernet, COTS applications, custom applications, SDDC virtualized, and SDN/NFV virtualized infrastructure. Based in the Dallas, Texas, US area and currently working for one of the companies he founded, Monolith Software.