Monthly Archives: June 2017

Intelligent Approach to Smart Cities

Smarter Smart Cities

At the Smart City Dublin forum, the subject was how municipalities can save money and better enable citizens. These opportunities are not driven by cities, but by service providers offering new services. Cities have assets, like rights-of-way. They have growing needs, like free Wi-Fi to support tourism. Governments have challenges, like shrinking budgets and stodgy policies. While some providers may shy away, many see these challenges as possible revenue.

Simple Concept

Where does service assurance come in?

Follow Shawn on Twitter

Predicting the IoT World

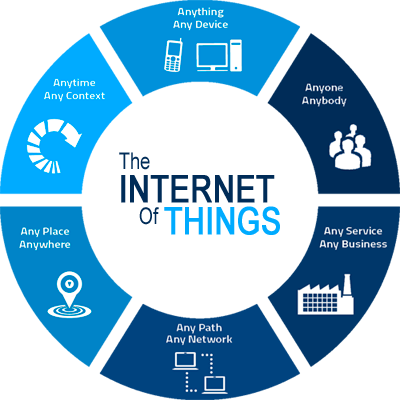

What are we going to do in the IoT world?

My typical response to service providers is, “well, that was last week…” All kidding aside, we live in the connected generation. Network access is the new oxygen. The price to be paid is complexity and scale. A good reference for the IoT use cases that exist today is this bemyapp article about Ten B2B use cases for IoT.

Common Threads

It's best to categorize them into three buckets. The first is environmental monitoring, such as smart meters that reduce the need for human interaction. The second is logistics tracking through RFID, another common trend in IoT communities. The third, and most common, is client monitoring. In mobility, handset tracking and trending is common in CEM. In an access network, it means monitoring the cable modems of millions of customers. Whichever category your use case falls into, the challenges will be similar. How do you deal with the fact that your network becomes tens of millions of small devices instead of thousands of regular-sized devices? How do you handle the fact that billions of pieces of data need to be processed, but only a fraction will be immediately useful? How can you break the network down into segments that humans can understand?

The solution is simple

With a single source of truth, you can see the forest for the trees. While the “things” in IoT are important, how they relay information and perform their work is equally important. Monitoring holistically allows a better understanding of the IoT environment; single-point solutions will not address IoT. Normalizing data enables higher scale while maintaining high reliability.
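To make the normalizing idea a little more concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the payload shapes, the field names, and the `Reading` record are hypothetical and do not describe any particular product's schema. The point is only that once every source is mapped into one common record, everything downstream can treat a smart meter and a cable modem the same way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """Common record that every source is mapped into (hypothetical schema)."""
    device_id: str
    metric: str
    value: float
    unit: str


def normalize_meter(raw: dict) -> Reading:
    # Assumed smart-meter payload, e.g. {"meter": "m-17", "kwh": "4.2"}
    return Reading(raw["meter"], "energy", float(raw["kwh"]), "kWh")


def normalize_modem(raw: dict) -> Reading:
    # Assumed cable-modem payload, e.g. {"mac": "00:11:22", "snr_db": 36.5}
    return Reading(raw["mac"], "snr", float(raw["snr_db"]), "dB")


# One normalizer per source type; adding a new source means adding one entry.
NORMALIZERS = {"meter": normalize_meter, "modem": normalize_modem}


def normalize(source: str, raw: dict) -> Reading:
    """Map a raw payload from a known source type into the common record."""
    return NORMALIZERS[source](raw)


readings = [
    normalize("meter", {"meter": "m-17", "kwh": "4.2"}),
    normalize("modem", {"mac": "00:11:22", "snr_db": 36.5}),
]
```

After this step, `readings` is a plain list of identical record types, which is what makes a single view across very different devices possible.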

How to accelerate

Now that the network has been unified into a single source of truth, operations can start simplifying their workload. First step: become service oriented. Performance, fault, and topology are too much data; it's the services you must rely upon. How are they doing, what are the problems, how do you fix them, and where do you need to augment your network? Next up, correlate everything. You need to look at the 1% of the 1% of the 1% to be successful. KQIs are necessary because the trees in the forest are anecdotal information: the EFFECT. Seeing the forest (as the KQI) allows you to become proactive, move quicker, and be more decisive, because you understand the trends and what is normal. It's time to stop letting the network manage you and start managing your network.
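As a rough sketch of what "service oriented" can mean in practice, the Python below rolls per-device health up into one number per service. The device names, the device-to-service mapping, the 0.95 threshold, and the choice of "fraction of healthy devices" as the KQI are all assumptions for illustration; a real KQI would be defined by the service itself.

```python
from collections import defaultdict


def service_kqi(device_status: dict, device_to_service: dict) -> dict:
    """Return the fraction of healthy devices for each service."""
    totals = defaultdict(int)
    healthy = defaultdict(int)
    for device, ok in device_status.items():
        service = device_to_service.get(device)
        if service is None:
            continue  # device is not mapped to any service; ignore it
        totals[service] += 1
        if ok:
            healthy[service] += 1
    return {service: healthy[service] / totals[service] for service in totals}


def degraded_services(kqis: dict, threshold: float = 0.95) -> list:
    """List the services whose KQI falls below the (assumed) threshold."""
    return sorted(s for s, value in kqis.items() if value < threshold)


# Hypothetical inventory: two cable modems and two smart meters.
status = {"cm-1": True, "cm-2": False, "m-1": True, "m-2": True}
mapping = {"cm-1": "broadband", "cm-2": "broadband",
           "m-1": "metering", "m-2": "metering"}

kqis = service_kqi(status, mapping)
print(degraded_services(kqis))
```

Operations then watch a handful of service-level numbers instead of millions of device-level events, and drill down only when a service drops below what is normal.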

End Goal is Automation

After unifying your view and simplifying your approach, it's time to automate. The whole point of IoT is massive scale and automation, but if your SA solution cannot integrate openly with the orchestration solution, how will you ever automate resolution and maintenance? We must all realize that human-based lifecycle management is not possible at IoT scale. It's time to match the value of your network with the value of managing it.

Assuring quality real-time services

Traveling from trade shows

Coming back from a trade show, I took an Uber to the airport and, oddly enough, experienced the value of real-time services firsthand. Like most people, I use the Uber ride-share service. My reasons are the same as everyone else's: convenience, price, quality, etc. In the past, I typically took taxis, which are twice the cost.

As we were driving, the driver's phone beeped. It told him that there was an accident up ahead and we needed to divert. Interestingly, my phone beeped as he said this, and I got the same message showing a red line up ahead. The driver, a longtime taxi cab driver, said this was one of the reasons he switched to Uber. Because other Uber drivers are constantly, autonomously reporting traffic (way more than cab drivers do), he spends more time driving and less time in traffic. He drives more customers and makes considerably more money. The customers are happier, and online bill pay means less hassle: he drives, and that is all he worries about. The cost of Uber? For him, nothing; the passengers pay that. He drives and gets paid. And he is nice: he offered me a paper (quaint) and a free bottle of water before boarding.

The moral of the story…

Uber is based in California, 6,000 miles and 9 hours of time difference away. Using AWS hosting, it allows real-time, automatic cross-matching of traffic to make lives a little easier a world away. This is the mini to the macro at work. This 60+ year old driver, who has been driving all his life, reaps the benefit. I pay an extra €2 and get a 40% reduction in rates, a smoother ride in a new car, and a nicer driver. That is value for the customer. What makes this miracle possible? Real-time digital services. Uber and others like it are winning the battle by pushing real-time digital services over LTE, competing against taxi cabs with CB radios. As the newspaper industry has already realized, the taxi cab industry will soon become… quaint…

My question to you: what is your real-time service? What does it mean to your business? How do you assure that it continues to be real-time?

Drop me a message @Shawn_Ennis. I would love to talk about your real-time services.

PS. Thanks, T-Mobile, for the included international roaming. Uber would not have been possible without you…