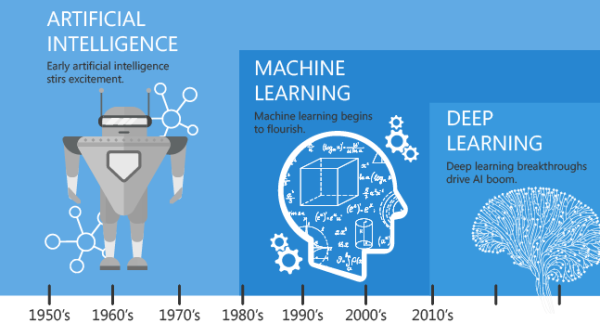

The technology of machine learning with AI should be our first focus. As with all new technology, it brings new terms and new concepts, several of which are heretical to the status quo. It's important to set a proper context so we can have serious discussions.

True to my word, here is the first blog in the series on machine learning with AI in service assurance. To explain some of the solutions in the marketplace, we first need to talk about the technology. The terms and concepts are new, but the goals for operations are the same: increasing automation and increasing quality.

Defining Machine Learning

Let’s start with machine learning. First, check out the Wikipedia article, which gives this definition:

“Machine learning is a field of computer science that often uses statistical techniques to give computers the ability to “learn” (i.e., progressively improve performance on a specific task) with data, without being explicitly programmed.”

I think of it like an ant farm. The worker ants dig the tunnels, and once they are done, the model is complete. In this analogy, the ants are the machine learning. As days go on, the ants change the farm when they need to. Say the farm falls over (oops): the ants have to rebuild, digging new pathways and updating the model. The ant farm is always changing and learning from its environment. Like the ants, machine learning builds and maintains patterns, which I call models. These models are compared to the current situation in real time, and artificial intelligence determines whether the two align (chronics) or diverge (anomalies).
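To make the "build a model, then compare it to now" idea concrete, here is a minimal sketch. It assumes the "model" is nothing more than a rolling mean and standard deviation over recent samples; the class name `RollingBaseline`, the `window` and `tolerance` parameters, and the latency numbers are hypothetical and chosen only for illustration. Real machine learning products build far richer models, but the rebuild-as-you-go and compare-against-current loop is the same shape.

```python
from collections import deque
from statistics import mean, pstdev


class RollingBaseline:
    """Hypothetical 'model' of normal behaviour that is rebuilt as new data arrives."""

    def __init__(self, window: int = 20, tolerance: float = 3.0) -> None:
        # Only the most recent `window` samples are kept; older ones fall out.
        self.history: deque[float] = deque(maxlen=window)
        # How many standard deviations from the mean still count as "aligned".
        self.tolerance = tolerance

    def learn(self, value: float) -> None:
        # Like the ants repairing tunnels: new samples reshape the model.
        self.history.append(value)

    def compare(self, value: float) -> str:
        if len(self.history) < 2:
            return "unknown"  # not enough data to have a model yet
        mu = mean(self.history)
        sigma = pstdev(self.history) or 1e-9  # avoid dividing by zero on flat data
        z = abs(value - mu) / sigma
        return "aligned" if z <= self.tolerance else "diverged"


# Usage: learn a latency baseline (hypothetical values, in ms), then compare.
model = RollingBaseline(window=5)
for latency_ms in [20, 21, 19, 20, 22]:
    model.learn(latency_ms)
print(model.compare(21))  # aligned
print(model.compare(60))  # diverged
```

Because the window is bounded, the model "forgets" old behaviour and relearns after a change, much like the ants rebuilding after the farm falls over.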

Defining AI

Wikipedia defines AI as “the study of intelligent agents: any device that perceives its environment and takes actions that maximize its chance of successfully achieving its goals.”

The complexity of AI spans a spectrum. At one end, AI performs cognitive, virtual reasoning. At the other, it is nothing more than rules processing. In the context of machine learning, AI usually applies or compares models, meaning what machine learning has learned from a separate set of data. In service assurance, the comparison is where the value lies: comparing the past with the present, or projecting the future from the past. That comparison is what gives analytics its insights.

Understanding Anomalies

When performing a comparison, the current situation either aligns with the model or diverges from it beyond some set degree. If the current situation is a repeat of the past, you have detected a chronic situation. When the current situation is new and unusual, it's called an anomaly. Datascience.com defines three types of anomalies:

- Point anomalies: A single instance of data is anomalous if it’s too far off from the rest. Business use case: Detecting credit card fraud based on “amount spent.”

- Contextual anomalies: The abnormality is context specific. This type of anomaly is common in time-series data. Imagine spending $100 on food every day: normal during the holiday season, but odd otherwise.

- Collective anomalies: A set of data instances collectively helps in detecting anomalies. Imagine someone is copying data from a remote machine to a local host. If this is unusual, the anomaly would flag it as a potential cyber attack.
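The three anomaly types can be told apart with very simple checks. The sketch below is illustrative only: it assumes a z-score test for point anomalies, a separate history per context for contextual anomalies, and a sliding-window sum for collective anomalies. The function names, the `k`, `window`, and `limit` parameters, and all numbers are hypothetical, not taken from Datascience.com or any product.

```python
from statistics import mean, pstdev


def is_point_anomaly(value: float, history: list[float], k: float = 3.0) -> bool:
    """Point anomaly: one value is far from all past values (e.g. 'amount spent')."""
    mu, sigma = mean(history), pstdev(history)
    if sigma == 0:
        return value != mu  # flat history: any change at all stands out
    return abs(value - mu) > k * sigma


def is_contextual_anomaly(value: float, context: str,
                          history_by_context: dict[str, list[float]],
                          k: float = 3.0) -> bool:
    """Contextual anomaly: the same value can be normal in one context, odd in another."""
    return is_point_anomaly(value, history_by_context[context], k)


def is_collective_anomaly(events: list[float], window: int, limit: float) -> bool:
    """Collective anomaly: ordinary-looking events whose total over a window is unusual."""
    return any(sum(events[i:i + window]) > limit
               for i in range(len(events) - window + 1))


# Usage with hypothetical data.
spend = {"holiday": [100, 95, 105, 110, 90], "normal": [20, 25, 18, 22, 30]}
print(is_point_anomaly(500, spend["normal"]))          # True
print(is_contextual_anomaly(100, "holiday", spend))    # False
print(is_contextual_anomaly(100, "normal", spend))     # True
# MB copied from a remote host per minute: a sustained burst vs. scattered copies.
print(is_collective_anomaly([5, 0, 0, 4, 0, 0, 6], window=3, limit=12))  # False
print(is_collective_anomaly([5, 4, 6, 5, 0], window=3, limit=12))        # True
```

Note that in the collective case no single transfer is large; only the sustained run inside the window crosses the limit.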

Consider chronics “negative” anomalies: instead of flagging something new and unusual, they flag something that repeats the past. Many customers I have talked to see chronic detection as the most commonly needed AI tool. Both anomalies and chronics are important AI tools that operations can use to better monitor their estate.
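Chronic detection asks the opposite question from anomaly detection: has this happened before, and how often? A minimal sketch, assuming a fault is identified by a hypothetical `(device, alarm type)` signature and that "chronic" means the signature appeared at least `min_repeats` times in past data. The device and alarm names below are made up for illustration.

```python
from collections import Counter
from collections.abc import Iterable

Signature = tuple[str, str]  # hypothetical fault identity: (device, alarm type)


def find_chronics(past_faults: Iterable[Signature],
                  current_faults: Iterable[Signature],
                  min_repeats: int = 3) -> set[Signature]:
    """Return current faults whose signature has recurred at least min_repeats times."""
    seen = Counter(past_faults)  # how often each signature occurred historically
    return {fault for fault in current_faults if seen[fault] >= min_repeats}


# Usage with hypothetical history.
past = [("router-1", "LinkDown")] * 4 + [("switch-7", "HighCPU")]
now = [("router-1", "LinkDown"), ("switch-7", "HighCPU"), ("fw-2", "ConfigChange")]
print(find_chronics(past, now))  # {('router-1', 'LinkDown')}
```

A fault that never appears in the history (here `fw-2`) is a candidate for anomaly detection instead.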

Focusing on Service Assurance

This blog focuses on the technology as it applies to service assurance (my major focus). Wikipedia defines service assurance as:

“The application of policies and processes by a Communications Service Provider to ensure that services offered over networks meet a pre-defined service quality level for an optimal subscriber experience.”

In other words, assuring the quality of the service. There are two ways to increase the quality of your services. One, automate resolutions, reducing how much issues impact customers. Two, proactively address issues before they become problems, and problems before they become outages.

Increasing Automation with Machine Learning

Increasing automation and reducing downtime are always goals for operations. Machine learning with AI tools focuses on correlation: the ability to segment faults into groups with new names like “situations”. Addressing each situation is key, but situations must be actionable. Any such correlation leverages a model built by machine learning, then compares that model against the current fault inventory to produce the segmentation. This is the industry's current focus, with mixed results, as we will discuss later.
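To show what "compare the model against the current fault inventory to produce the segmentation" might look like, here is a deliberately simple sketch. It assumes the machine-learned model has already been reduced to a hypothetical mapping from device to group (for example, the service it supports), and it groups faults into a situation when they share a group and arrive within `max_gap` seconds of each other. The `Fault` class, `segment_into_situations`, and all device names are illustrative assumptions, not any vendor's correlation engine.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Fault:
    device: str
    timestamp: float  # seconds since epoch
    summary: str


def segment_into_situations(faults: list[Fault], learned_model: dict[str, str],
                            max_gap: float = 300.0) -> list[list[Fault]]:
    """Group faults that share a learned group and are no more than max_gap seconds apart."""
    by_group: dict[str, list[Fault]] = defaultdict(list)
    for fault in sorted(faults, key=lambda f: f.timestamp):
        # Devices the model has never seen fall into a group of their own.
        by_group[learned_model.get(fault.device, fault.device)].append(fault)

    situations: list[list[Fault]] = []
    for group_faults in by_group.values():
        current = [group_faults[0]]
        for fault in group_faults[1:]:
            if fault.timestamp - current[-1].timestamp <= max_gap:
                current.append(fault)  # close in time: same situation
            else:
                situations.append(current)  # gap too large: start a new situation
                current = [fault]
        situations.append(current)
    return situations


# Usage with a hypothetical learned model and fault inventory.
model = {"edge-1": "vpn-service", "core-3": "vpn-service", "dns-2": "dns"}
inventory = [
    Fault("edge-1", 0, "LinkDown"),
    Fault("core-3", 60, "BGP peer lost"),
    Fault("dns-2", 90, "HighLatency"),
    Fault("edge-1", 5000, "LinkDown"),
]
for situation in segment_into_situations(inventory, model):
    print([f.device for f in situation])
```

Four faults become three situations here. Whether each situation is actionable depends entirely on how good the learned grouping is, which is where real-world results vary.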

Being Proactive with Machine Learning

Being proactive is another buzzword from the late 90s. That was a decade ago, right? The reality is that it's hard: you need to make educated guesses, and you can't make quality guesses without the data. Data reduction, prevalent in the industry, has caused operations to discard 99% (or more) of their data, and without this data your guesses will be poor. A machine learning model with AI can leverage low-level information that may predict a future outage. That prediction gives operations lead time to fix the issue before the outage occurs. This is where the industry's hype is focused today.
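As a toy illustration of how low-level data can buy lead time, the sketch below fits a straight line (ordinary least squares) to evenly spaced samples of a metric and estimates how long until it crosses an outage threshold. Linear extrapolation is a stand-in chosen for clarity, not a claim about how any machine learning product predicts outages; the function name, the hourly sampling, the error-count metric, and the threshold are all hypothetical.

```python
from typing import Optional


def hours_until_threshold(samples: list[float], threshold: float,
                          interval_hours: float = 1.0) -> Optional[float]:
    """Fit a least-squares line to evenly spaced samples and estimate hours until threshold."""
    n = len(samples)
    if n < 2:
        return None  # a trend needs at least two points
    xs = [i * interval_hours for i in range(n)]
    x_mean = sum(xs) / n
    y_mean = sum(samples) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, samples))
    slope = sxy / sxx
    if slope <= 0:
        return None  # flat or improving: not trending toward the threshold
    intercept = y_mean - slope * x_mean
    crossing_time = (threshold - intercept) / slope
    # Lead time is measured from the most recent sample; 0.0 means already crossed.
    return max(0.0, crossing_time - xs[-1])


# Usage: hypothetical hourly error counts on an interface, outage expected at 30.
print(hours_until_threshold([10, 12, 14, 16, 18], threshold=30))  # 6.0
```

Here the trend gives roughly six hours of warning. That lead time only exists if the low-level samples were kept rather than discarded by data reduction.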

It is important to provide context when discussing machine learning with AI. This helps us all understand the technology and enables the deeper discussion. The concepts and terms are new, but the industry hasn't changed. Next up: applying this technology to problems experienced by the industry.

Article Map

- My Journey into Machine Learning with AI

- The Technology of Machine Learning with AI

- Challenges Addressed by Machine Learning with AI

- An Umbrella for Fault Storm Management

- Minimizing Faults with Machine Learning and AI

- Learning Your Operational Performance

- Automated Detection & Mitigation of Chronic Issues

- Service Assurance’s Future with Machine Learning

- End of the Beginning for Machine Learning

Serial entrepreneur and operations subject matter expert who likes to help customers and partners achieve solutions that solve critical problems. Experience in traditional telecom, ITIL enterprise, global managed service providers, and datacenter hosting providers. Expertise in optical DWDM, MPLS networks, MEF Ethernet, COTS applications, custom applications, SDDC virtualized, and SDN/NFV virtualized infrastructure. Based out of Dallas, Texas US area and currently working for one of his founded companies, Monolith Software.