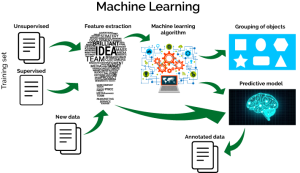

A great topic of conversation is fault reduction. First, let's not confuse terms. Data reduction is discarding data deemed not actionable. Fault reduction is prioritizing the fault stream so that the most impactful and actionable faults bubble up to the top. You should never ignore faults; they point to a problem that may cause an outage in the future. Fault reduction is a challenging area of service assurance. There are so many ways to cheat the system, such as simply deleting non-actionable faults. But let's get serious. We should focus on best practice: identifying and prioritizing faults so that they can be filtered. This blog describes minimizing faults with machine learning (ML) and AI. You can be the judge of the methodology.

Understanding Fault Noise

Imagine, if you will, a universal "noise" level for operations. When there are tons of outages, operations only work on outages; they want no noise. Outages are usually straightforward and actionable. You may want to apply maintenance window filters, then verify that the affected services are in production. Many filters are straightforward.

The trouble is moving beyond outages, or dealing with outages that are not actionable. Let's talk about the first: problems and issues. Problems impair a service but do not take it down; a loss of redundancy is one example. Usually you need two problems before the situation becomes an outage. Issues are things like misconfigurations that complicate matters and can cause problems. The trouble is that a mature, legacy network has tens to hundreds of outages, exponentially more problems than outages, and exponentially more issues than problems. You are talking information overload. How do you rank them? That is where ML/AI is being leveraged. The secret ingredient is statistical rarity. If a problem or issue is new and unusual, there is a greater chance of a quick fix. The less rare it is, the more likely it is not actionable. But let's test my hypothesis…
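To make the rarity idea concrete, here is a minimal Python sketch, assuming each fault can be reduced to a string signature (for example, fault type plus device/interface) and that a history of past signatures is available. It scores each current fault by its surprisal, -log2(p), where p is the fault's smoothed historical frequency, so never-seen faults score highest. The function names, the signature format, and the Laplace smoothing parameter `alpha` are illustrative assumptions, not the method of any particular product.

```python
import math
from collections import Counter
from typing import Dict, Iterable, List


def rarity_scores(history: Iterable[str], current: List[str], alpha: float = 1.0) -> Dict[str, float]:
    """Score each current fault signature by surprisal, -log2(p).

    p is the Laplace-smoothed historical frequency of the signature, so a
    signature never seen before still gets a finite (and high) score.
    """
    counts = Counter(history)
    total = sum(counts.values())
    # Count every signature seen in history or in the current window.
    vocab = set(counts) | set(current)
    denom = total + alpha * len(vocab)
    return {sig: -math.log2((counts[sig] + alpha) / denom) for sig in current}


def rank_by_rarity(history: Iterable[str], current: List[str], alpha: float = 1.0) -> List[str]:
    """Return the current faults ordered rarest (assumed most actionable) first."""
    scores = rarity_scores(history, current, alpha)
    return sorted(current, key=lambda sig: scores[sig], reverse=True)


# Hypothetical history: one chronically flapping link and an occasional BGP flap.
history = ["linkDown:sw1/ge-0/0/1"] * 50 + ["bgpFlap:rtr2"] * 5
current = ["linkDown:sw1/ge-0/0/1", "bgpFlap:rtr2", "fanFail:sw3"]
print(rank_by_rarity(history, current))
# -> ['fanFail:sw3', 'bgpFlap:rtr2', 'linkDown:sw1/ge-0/0/1']
```

With a history dominated by one chronic link-down, a brand-new fan failure ranks first and the chronic fault ranks last. Surprisal is just one way to express rarity; any score that decreases with historical frequency yields the same ordering.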

The Rogue Device Example

For example, take a rogue device. Let's say someone adds a new device to the network without following best practices. We receive traps from the device but nothing else, so it is a rogue device. When a new device alarms for the first time, it creates an anomaly. That anomaly kicks off automation that validates the device's configuration, and if validation fails, the automation opens a ticket for manual intervention. The net result is no human intervention unless validation fails. This follows best practice: no quasi-monitored production devices exist in the network.
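The rogue-device workflow could be sketched as an event handler. This is a minimal Python sketch under stated assumptions: a trap is reduced to the name of the device that sent it, `known_devices` is seeded from inventory, and `validate_config` and `open_ticket` are hypothetical hooks standing in for whatever configuration-validation automation and ticketing system you actually use.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set, Tuple


@dataclass
class RogueDeviceWatcher:
    """Treat the first trap from a previously unseen device as an anomaly."""

    validate_config: Callable[[str], bool]   # hypothetical hook: True if config passes checks
    open_ticket: Callable[[str, str], None]  # hypothetical hook: (device, reason) -> open a ticket
    known_devices: Set[str] = field(default_factory=set)  # e.g. seeded from inventory
    anomalies: List[str] = field(default_factory=list)

    def on_trap(self, device: str) -> None:
        if device in self.known_devices:
            return  # already seen: not a first-alarm anomaly
        # First alarm from this device: record the anomaly and run validation.
        self.known_devices.add(device)
        self.anomalies.append(device)
        if not self.validate_config(device):
            # Only a failed validation pulls a human in.
            self.open_ticket(device, "config validation failed on first trap")


# Hypothetical usage: pretend only "core-" devices pass validation.
tickets: List[Tuple[str, str]] = []
watcher = RogueDeviceWatcher(
    validate_config=lambda d: d.startswith("core-"),
    open_ticket=lambda d, reason: tickets.append((d, reason)),
    known_devices={"core-sw1"},
)
for dev in ["core-sw1", "lab-sw9", "lab-sw9", "core-sw2"]:
    watcher.on_trap(dev)
print(watcher.anomalies, tickets)
```

Marking the device as seen on its first trap, whether or not validation passes, keeps a chatty device from opening a ticket on every trap; a real deployment would likely also re-validate periodically.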

Dueling Interfaces

Another example is interface monitoring. Let's say two interfaces on a switch are down. One goes down all the time; the other rarely does. Which do you think is more actionable? With ML/AI technology, you can create a model based on device/interface occurrence. If the current situation breaks that model, you can enrich the rarer alarm. This way, when time is a constraint, operations can focus on the more actionable fault. The result is addressing what is easy first, then working on what is harder later. With prioritization, operations can increase their efficiency, which also maximizes the value to the organization as a whole.
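A minimal sketch of a device/interface occurrence model, using plain frequency counts as a stand-in for an ML model. It assumes you have a history of past interface-down events keyed by (device, interface); an alarm whose historical share falls below a threshold is treated as breaking the model and enriched as rare. The class name, the alarm dictionary fields, and the 5% default threshold are hypothetical choices for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

Key = Tuple[str, str]  # (device, interface)


class InterfaceOccurrenceModel:
    """Frequency model of past interface-down events per device/interface."""

    def __init__(self, history: Iterable[Key]):
        self.counts = Counter(history)
        self.total = sum(self.counts.values())

    def frequency(self, key: Key) -> float:
        """Share of historical down events for this device/interface (0.0 if never seen)."""
        return self.counts[key] / self.total if self.total else 0.0

    def enrich(self, alarms: List[Dict], rare_threshold: float = 0.05) -> List[Dict]:
        """Tag each alarm with its frequency and a rare flag, rarest first."""
        enriched = []
        for alarm in alarms:
            freq = self.frequency((alarm["device"], alarm["interface"]))
            # Falling below the threshold is this sketch's notion of "breaking the model".
            enriched.append({**alarm, "frequency": freq, "rare": freq < rare_threshold})
        return sorted(enriched, key=lambda a: a["frequency"])


# Hypothetical history: ge-0/0/1 goes down constantly, ge-0/0/7 almost never.
model = InterfaceOccurrenceModel([("sw1", "ge-0/0/1")] * 98 + [("sw1", "ge-0/0/7")] * 2)
alarms = [
    {"device": "sw1", "interface": "ge-0/0/1"},
    {"device": "sw1", "interface": "ge-0/0/7"},
]
for a in model.enrich(alarms):
    print(a["interface"], a["frequency"], a["rare"])
```

Here the interface behind 98% of past down events is tagged routine, while the one that has gone down only twice is tagged rare and sorted first, matching the triage order described above.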

Reducing the fault stream is something everyone wants to do. We must remember there are good and bad ways to achieve it. A good way is to rank your fault stream by rarity. ML/AI technology can help leverage rarity, which increases operational efficiency. This is yet another advantage of leveraging event analytics for real-time operations.

About the Author

Serial entrepreneur and operations subject matter expert who likes to help customers and partners achieve solutions to critical problems. Experience in traditional telecom, ITIL enterprise, global managed service providers, and datacenter hosting providers. Expertise in optical DWDM, MPLS networks, MEF Ethernet, COTS applications, custom applications, SDDC virtualized infrastructure, and SDN/NFV virtualized infrastructure. Based in the Dallas, Texas, US area and currently working for one of his founded companies, Monolith Software.