The IoT/IoE generation has been born, and countless things are about to be interconnected. The hype is non-stop, but many things are becoming a reality. AT&T and Maersk closed a deal back in 2015 that has recently become a reality: asset tracking for cold shipping containers. Uber is now providing driverless trucks to deliver beer, while GPS trackers are being used to track the elderly. These services are becoming ubiquitous and common, and the use cases are growing in both variety and depth. But IoT is also still a very pioneering field. If IoT managed services are to exist, operations teams will need to manage them. The goal here is to start asking key questions, in the hope that analysis can provide some answers. Let’s discuss the key concepts driving the new field of IoT Service Assurance.

Key Perspectives for IoT Service Assurance

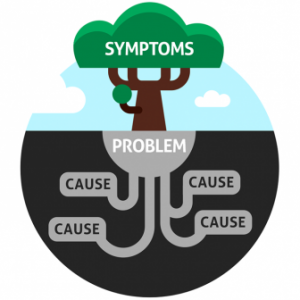

For any IoT service, you must understand who uses it and who provides it. As I see it, there are three key perspectives for IoT services. First, there is the network provider, who provides network access for the “thing”. The “network” could be LTE, Wi-Fi, or any other technology. Network providers focus on network quality, much like typical mobile providers. Compare that to IoT services monitored with an application focus. Here it’s about monitoring the availability and performance of the “things” themselves: you want to make sure they are working. Lastly, you may not care about the “things” at all; perhaps you only care about the data from them. Then performing correlation and understanding the “sum of all parts” would be the key focus. These perspectives drive your requirements and your value proposition. Through them, you can define quality and success criteria for your IoT services.

Key Requirements of IoT Service Assurance

Before we get too far along, let’s first talk about terminology. In the world of IoT, what is a device? We have to ask: is this “thing” a device? In the world of mobility, the handset is not a device; it’s an endpoint. So is the pallet being monitored in the cold shipping container a device or an endpoint? Just as we agree on the perspectives that drive requirements, we should agree on terminology. Let’s walk through some use cases to better understand typical requirements.

Smart Cold Storage

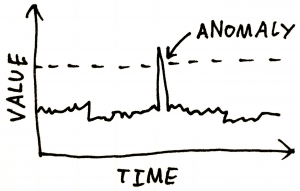

In the Maersk use case, let’s say the initial roll-out covers 250k sensors on pallets. At regular intervals, these sensors report data via wireless burst communications. The data includes KPIs that drive visibility and business intelligence. Some common examples I have found are temperature, battery life, and vibration rate. Other environmental KPIs may also be required, such as light levels, humidity, and weight. Location information with signal strength could be useful as well. Tracking these in real time makes it possible to provide trends and predictions. Ideally, you would know about a failure before the container is put on the boat.
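
To make “trend and prediction” concrete, here is a minimal sketch that fits a least-squares line to recent temperature readings and estimates how long until the trend crosses a limit. The `Reading` schema, the 5.0 °C limit, and the choice of a simple linear fit are all assumptions for illustration. A production system might use a different model entirely.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Reading:
    """One poll from a pallet sensor (hypothetical schema for illustration)."""
    minutes: float        # minutes since monitoring started
    temperature_c: float  # reported temperature in degrees Celsius


def minutes_until_breach(readings: Sequence[Reading], limit_c: float) -> Optional[float]:
    """Fit a least-squares line to temperature vs. time and estimate how many
    minutes after the latest reading the trend reaches limit_c.

    Returns 0.0 if the latest reading is already at or above the limit, and
    None if there is too little data or the trend is flat or cooling.
    """
    if len(readings) < 2:
        return None
    latest = readings[-1]
    if latest.temperature_c >= limit_c:
        return 0.0
    n = len(readings)
    mean_t = sum(r.minutes for r in readings) / n
    mean_y = sum(r.temperature_c for r in readings) / n
    sxx = sum((r.minutes - mean_t) ** 2 for r in readings)
    if sxx == 0:
        return None  # all readings share one timestamp; no trend to fit
    sxy = sum((r.minutes - mean_t) * (r.temperature_c - mean_y) for r in readings)
    slope = sxy / sxx  # degrees per minute
    if slope <= 0:
        return None  # flat or cooling: no predicted breach
    intercept = mean_y - slope * mean_t
    breach_minute = (limit_c - intercept) / slope  # where the fitted line hits the limit
    return max(breach_minute - latest.minutes, 0.0)


# Four 15-minute polls from a container that is slowly warming.
history = [Reading(0, 2.0), Reading(15, 2.5), Reading(30, 3.0), Reading(45, 3.5)]
print(minutes_until_breach(history, limit_c=5.0))  # roughly 45 minutes
```

A linear fit is the simplest choice that still flags a container that is warming steadily. Noisy sensors would probably need smoothing or a longer window first.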

Bottom line, we would have around 25 KPIs per poll interval. Let’s do some math for performance data: 250k sensors * 25 KPIs * 4 (15-minute polls, 4/hour) * 24 (hours/day) = 600 million data points per day. With standard database storage (say, MySQL), that would require about 200GB per day. Is keeping the sensor data worth $300 per month of data on AWS EC2? Storage is inexpensive enough that real-time monitoring of sensor data becomes realistic.
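
The arithmetic above can be captured in a small helper so that other fleet sizes, KPI counts, or poll intervals are easy to plug in. This is a sketch only. The cost rate of roughly $0.50 per million data points is an assumption: it is simply the ratio implied by this paragraph’s own figures (600 million points to $300), not a published AWS price.

```python
# Polls per day at a 15-minute interval: 4 per hour * 24 hours = 96.
def daily_data_points(endpoints: int, kpis_per_poll: int, poll_minutes: int = 15) -> int:
    """Data points generated per day when every endpoint reports every KPI each poll."""
    polls_per_day = (24 * 60) // poll_minutes
    return endpoints * kpis_per_poll * polls_per_day


# Assumption: ~$0.50 per million data points, the ratio implied by the
# 600 million points -> $300 estimate above. Not an official AWS price.
ASSUMED_USD_PER_MILLION_POINTS = 0.50


def estimated_cost_usd(points: int, usd_per_million: float = ASSUMED_USD_PER_MILLION_POINTS) -> float:
    """Scale a data-point count by the assumed per-million storage rate."""
    return points / 1_000_000 * usd_per_million


points = daily_data_points(endpoints=250_000, kpis_per_poll=25)
print(f"{points:,} points/day, ~${estimated_cost_usd(points):,.0f}")
# 600,000,000 points/day, ~$300
```

Changing `poll_minutes` shows how sensitive the storage budget is to the poll interval. For example, 5-minute polls triple the volume.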

Faults are different. Examples include failed reconnects and emergency-button-pushed scenarios. These faults create opportunities: shipping personnel can fix the container before the temperature gets too warm, saving valuable merchandise from spoilage. Together, this performance and fault information combines to provide detailed, real-time IoT Service Assurance views.
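
One way to combine fault and performance data is to let the latest temperature escalate a fault’s severity. The sketch below illustrates this. The fault codes, severity levels, and the 4.0 °C escalation threshold are hypothetical and were chosen only to show the idea.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    INFO = 1
    MAJOR = 2
    CRITICAL = 3


# Hypothetical fault codes and default severities, for illustration only.
DEFAULT_SEVERITY = {
    "failed_reconnect": Severity.MAJOR,
    "emergency_button": Severity.CRITICAL,
}


@dataclass
class FaultEvent:
    sensor_id: str
    fault_type: str
    temperature_c: float  # last temperature reported with the fault


def triage(event: FaultEvent, warm_limit_c: float = 4.0) -> Severity:
    """Return the alert severity, escalating a MAJOR fault to CRITICAL when
    the container is already at or above warm_limit_c (an assumed threshold)."""
    severity = DEFAULT_SEVERITY.get(event.fault_type, Severity.INFO)
    if severity is Severity.MAJOR and event.temperature_c >= warm_limit_c:
        return Severity.CRITICAL
    return severity


print(triage(FaultEvent("pallet-7", "failed_reconnect", 2.5)))  # Severity.MAJOR
print(triage(FaultEvent("pallet-7", "failed_reconnect", 4.5)))  # Severity.CRITICAL
```

The same failed reconnect means something very different on a cold container than on one that is already warming, which is why correlating the two feeds pays off.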

Driverless Trucks Use Case

Let’s look at another use case: Uber with driverless trucks. The Wired article does not say how many trucks are involved, so let’s look at UPS instead. UPS has more than 100k delivery trucks. Imagine if those logistics were 100% automated. This would put tons of “things” on the network. The network, controller, and data would have to work together to provide a quality IoT service.

First, let’s look at performance data. The KPIs would be similar to those in the Maersk example: speed, direction, location, and range would be valuable real-time data. Service KQIs like ETA and number of stops remaining would drive efficiencies. Let’s do the same math as the Maersk example: 100k trucks * 50 KPIs * 4 (15-minute polls, 4/hour) * 24 (hours/day) = 480 million data points per day. That comes to about $240 per day on AWS. This shows that the storage requirements are practical for driverless logistics.
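
Service KQIs are derived from raw KPIs. The sketch below shows how “stops remaining” and “ETA” might be computed from a truck’s remaining route legs and its average-speed KPI. The function name, the one-leg-per-stop route model, and the fixed 5-minute dwell time per stop are assumptions for illustration.

```python
from typing import Dict, Sequence


def route_kqis(remaining_leg_km: Sequence[float], avg_speed_kmh: float,
               dwell_min_per_stop: float = 5.0) -> Dict[str, float]:
    """Derive two service KQIs from raw truck KPIs.

    remaining_leg_km:   distance of each leg still to drive, one leg per remaining stop.
    avg_speed_kmh:      recent average speed from the truck's speed KPI.
    dwell_min_per_stop: assumed unloading time at each stop.
    """
    if avg_speed_kmh <= 0:
        raise ValueError("avg_speed_kmh must be positive")
    stops_remaining = len(remaining_leg_km)
    drive_min = sum(remaining_leg_km) / avg_speed_kmh * 60  # hours -> minutes
    return {
        "stops_remaining": stops_remaining,
        "eta_minutes": drive_min + dwell_min_per_stop * stops_remaining,
    }


print(route_kqis([10, 20, 30], avg_speed_kmh=60))
# {'stops_remaining': 3, 'eta_minutes': 75.0}
```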

Faults here would include vital real-time activity, such as an ‘out-of-gas’ event or network errors. Getting real-time alerts on a crash would definitely be useful, so fault management would be a necessity in this use case. Again, there are plenty of reasons to create and leverage real-time alerts.

Another use case would be smart home monitoring, like Google Nest or Ecobee. These OTT IoT providers track and monitor things like temperature and humidity. There is no fault data and no analytics. The number of homes monitored by Nest or Ecobee is not readily available on the internet. According to Dallas News, 8 million thermostats are sold yearly. According to Fast Company, Ecobee has 24% market share, so roughly 2 million homes per year. Ecobee has been in business for more than 5 years, so assume they have 10 million active thermostats. Doing the math: 10M homes * 10 KPIs * 4 (15-minute polls, 4/hour) * 24 (hours/day) = 9.6 billion, or roughly 10 billion, data points per day. That would be around $4800 per day on AWS.
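
Written out step by step, the smart-home estimate chains the sales figure, the market share, and an assumed 5 years of accumulated installs. This is the same back-of-the-envelope reasoning as above, not measured data:

```latex
\begin{aligned}
\text{Ecobee thermostats sold per year} &\approx 8\,\text{M} \times 0.24 = 1.92\,\text{M} \approx 2\,\text{M} \\
\text{Installed base (assumed)} &\approx 2\,\text{M/year} \times 5\,\text{years} = 10\,\text{M} \\
\text{Data points per day} &= 10\,\text{M homes} \times 10\,\text{KPIs} \times 4\,\text{polls/hour} \times 24\,\text{hours} \\
&= 9.6 \times 10^{9} \approx 10\ \text{billion}
\end{aligned}
```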

IoT Service Assurance is Practical

What is interesting about these use cases is their practicality. Scalability is not a problem with modern solutions, and all three cases show that from any perspective: real-time IoT service assurance is achievable. I am amazed at how achievable monitoring can be for complex IoT services. Now you must ask the questions “why” and “how”. To answer them, you must understand how flexible your tools are and what value you can get from them.

Understanding Flexibility of IoT Service Assurance

Let’s discuss flexibility. First, how difficult is collecting this data? Let’s focus on the world of open APIs. The expectation is that these messages would come through a load-balanced REST application server. At 600 million hits per day, that averages about 6.9k hits/sec (600,000,000 / 86,400 seconds), which is well within Apache and load balancer tolerances. As long as the messaging follows open API concepts, collection should be practical. So from a flexibility standpoint, assuming you embrace open APIs, this is practical as well.
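
The request rate depends heavily on how sensors package their KPIs. The sketch below compares one request per KPI value with one request per poll. The batching scenario is an assumption: it supposes each sensor sends all 25 KPIs in a single REST message every 15 minutes. Both figures are daily averages, so real peaks will be higher.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_rate_per_second(requests_per_day: int) -> float:
    """Average request rate; real traffic will burst above this."""
    return requests_per_day / SECONDS_PER_DAY


# One HTTP request per KPI value (the 600 million figure from the cold storage example).
per_kpi = average_rate_per_second(600_000_000)

# Assumption: each of the 250k sensors batches all 25 KPIs into one request per 15-minute poll.
per_poll = average_rate_per_second(250_000 * 4 * 24)

print(f"one request per KPI:  {per_kpi:,.0f} req/s")   # about 6,944
print(f"one request per poll: {per_poll:,.0f} req/s")  # about 278
```

Batching is one of the simplest levers for keeping collection cheap, since it cuts request volume by the number of KPIs per message.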

Understanding the Value of IoT Service Assurance

It’s a fact: real-time is a key need in IoT Service Assurance. If whatever you want to track can wait 24 to 48 hours before you need to know about it, you can achieve it with a reporting tool. If all you need is to store the data and slap a dashboard/reporting engine on top, then this becomes easy. Start with an open source database like MariaDB, which is low cost and widely available. Next, add a COTS dashboard and reporting tool like Tableau for a cost-effective solution.
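
For the store-and-report approach, the heavy lifting is usually an aggregate query that a dashboard tool reads on a schedule. The sketch below uses Python’s built-in `sqlite3` as a stand-in for MariaDB so that it is self-contained. The table layout, column names, and sample rows are hypothetical.

```python
import sqlite3
from typing import List, Tuple


def open_store() -> sqlite3.Connection:
    """In-memory SQLite as a stand-in for a MariaDB/MySQL table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE readings (sensor_id TEXT, day TEXT, kpi TEXT, value REAL)"
    )
    return conn


def daily_average(conn: sqlite3.Connection, kpi: str) -> List[Tuple[str, str, float]]:
    """Per-sensor, per-day average of one KPI: the kind of query a reporting tool runs."""
    return conn.execute(
        "SELECT sensor_id, day, AVG(value) FROM readings "
        "WHERE kpi = ? GROUP BY sensor_id, day ORDER BY sensor_id, day",
        (kpi,),
    ).fetchall()


conn = open_store()
conn.executemany(
    "INSERT INTO readings VALUES (?, ?, ?, ?)",
    [  # sample rows for illustration
        ("pallet-1", "2016-06-01", "temperature", 2.0),
        ("pallet-1", "2016-06-01", "temperature", 4.0),
        ("pallet-2", "2016-06-01", "temperature", 3.0),
        ("pallet-1", "2016-06-01", "battery", 0.9),
    ],
)
print(daily_average(conn, "temperature"))
# [('pallet-1', '2016-06-01', 3.0), ('pallet-2', '2016-06-01', 3.0)]
```

Nothing here reacts to an individual reading as it arrives, which is exactly the limitation when a failure must be acted on immediately.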

In contrast, real-time means you need to know immediately that a cold storage container has failed. You need to automate dispatch: find the closest human and text that operator to fix the problem. Real-time means that you have a delivery truck on the side of the road and need to dispatch a tow truck. Real-time IoT Service Assurance means massive collection, intelligent correlation, and automated remediation. Now consider the OTT smart home use case: the Nest thermostat is not going to call the fire department when it reaches 150F. Everything is use-case dependent, so let your requirements dictate the tool you use.
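
“Find the closest human and text that operator” comes down to a geospatial lookup. The sketch below ranks operators by great-circle (haversine) distance from the failed asset and builds the message to send. The `Operator` roster, the phone numbers, and the coordinates are hypothetical. The actual SMS gateway call is deliberately left out, because the right API depends on your provider.

```python
import math
from dataclasses import dataclass
from typing import Sequence, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class Operator:
    """A field technician who can be texted (hypothetical roster entry)."""
    name: str
    phone: str
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two lat/lon points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def dispatch(asset_id: str, lat: float, lon: float, roster: Sequence[Operator]) -> Tuple[str, str]:
    """Pick the closest operator and build the text to send.

    Returns (phone, message); sending it through an SMS gateway is left out.
    """
    if not roster:
        raise ValueError("no operators available")
    nearest = min(roster, key=lambda op: haversine_km(lat, lon, op.lat, op.lon))
    km = haversine_km(lat, lon, nearest.lat, nearest.lon)
    return nearest.phone, f"{nearest.name}: asset {asset_id} failed {km:.1f} km from you."


roster = [
    Operator("Dallas tech", "555-0100", 32.7767, -96.7970),
    Operator("Houston tech", "555-0199", 29.7604, -95.3698),
]
# A failed container reported near Fort Worth.
print(dispatch("container-42", 32.7555, -97.3308, roster))
```

A linear scan is fine for a small roster. With thousands of field staff, a spatial index would be the natural next step.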

Lessons Learned for IoT Service Assurance

- IoT-based managed services are currently available and growing.

- Assuring them properly will require new concepts around scalability and flexibility.

- With IoT, you must always ask how far down it is worth monitoring.

- Almost all requirements include some sort of geospatial tracking or correlation.

My advice on IoT Service Assurance

- As always, follow your researched requirements. Get what you need first, then worry about your wants.

- Make sure you have tools with a focus on flexibility, scale, and automation. This vertical has many fringe use cases and they are growing.

- IoT unifies network, application, and data management more than any other technology. Having a holistic approach can provide a multiplying and accelerating effect.

About the Author

Serial entrepreneur and operations subject matter expert who likes to help customers and partners achieve solutions that solve critical problems. Experience in traditional telecom, ITIL enterprise, global managed service providers, and datacenter hosting providers. Expertise in optical DWDM, MPLS networks, MEF Ethernet, COTS applications, custom applications, SDDC virtualized, and SDN/NFV virtualized infrastructure. Based in the Dallas, Texas, US area and currently working for Monolith Software, one of the companies he founded.